Algorithms, the digital decision-making tools at the core of some of the most successful and powerful technologies of the modern era, don’t come to conclusions in a vacuum. Their predictive abilities come with tradeoffs shaped by the conscious and unconscious biases their creators have built into them.

With the stakes so high and the factors so complex, computer scientists and technology companies must look more closely at how their algorithms balance accuracy with fairness and a host of other social concerns.

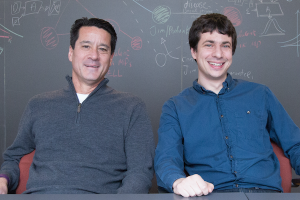

Michael Kearns, founding director of the Warren Center and National Center Professor of Management & Technology in Penn Engineering’s Department of Computer and Information Science (CIS), and fellow Warren Center member Aaron Roth, Class of 1940 Bicentennial Term Associate Professor in CIS, outline this goal and ways to achieve it in their book, The Ethical Algorithm.

Daniel Akst of the Pennsylvania Gazette recently spoke with Kearns about the tradeoffs that ethical algorithms entail:

Your book is about the need for ethical algorithms. What gives an algorithm moral properties? How can a set of computer instructions run into ethical or moral issues?

It’s about outcomes. These days an algorithm could be making lending decisions or criminal sentencing decisions, and could produce socially undesirable outcomes. For example, a lending algorithm could produce a certain number of unwarranted loan rejections, and the rate of these could be twice as high for Black applicants as for white ones. If we saw that behavior institutionally in a human lending organization, we would say something in this organization is resulting in apparent racial discrimination. Algorithms can do the same thing.

Do algorithms do such things on purpose?

Computers are precise. They do exactly what they are told to do, and they do nothing more and nothing less. And that precision is what has gotten us into the problems that we talk about in the book.

When you tell an algorithm to find a predictive model from historical data that is as accurate as possible, it’s going to do that, even if that model exhibits other behaviors that we don’t like. So it could be that the most accurate model for consumer lending overall produces disparate false rejection rates across different racial groups, and we would consider that racial discrimination. What we are advocating in the book is that the entire research community thinks about these problems. The fix for this is to be precise about the other goals that we have. When we said maximize the predictive accuracy, we didn’t realize that might result in a racially discriminatory model. So we have to go back and mention our other objective precisely in the algorithm. We have to say something akin to: “OK, maximize the predictive accuracy, but don’t do that if it results in too much racial disparity.”
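To make the idea of "maximize the predictive accuracy, but don’t do that if it results in too much racial disparity" concrete, here is a minimal Python sketch. It is not the method from the book, only an illustration under stated assumptions: the applicants, their scores, the group labels "A" and "B", the candidate approval thresholds, and the `max_gap` limit are all invented for the example. A "false rejection" here means turning down an applicant who would have repaid.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    group: str    # hypothetical group label ("A" or "B")
    score: float  # hypothetical model score; higher means more likely to repay
    repaid: bool  # True if the applicant would in fact have repaid


def evaluate(applicants, threshold):
    """Return (accuracy, false-rejection-rate gap) when approving score >= threshold."""
    correct = 0
    worthy = {}    # group -> number of applicants who would have repaid
    rejected = {}  # group -> how many of those were rejected anyway
    for a in applicants:
        approved = a.score >= threshold
        correct += (approved == a.repaid)
        if a.repaid:
            worthy[a.group] = worthy.get(a.group, 0) + 1
            rejected[a.group] = rejected.get(a.group, 0) + (not approved)
    rates = [rejected[g] / worthy[g] for g in worthy]
    gap = max(rates, default=0.0) - min(rates, default=0.0)
    return correct / len(applicants), gap


def pick_threshold(applicants, thresholds, max_gap=None):
    """Return (threshold, accuracy, gap) with the highest accuracy.

    If max_gap is given, thresholds whose false-rejection-rate gap
    exceeds it are skipped. Returns None if nothing qualifies.
    """
    best = None
    for t in thresholds:
        acc, gap = evaluate(applicants, t)
        if max_gap is not None and gap > max_gap:
            continue
        if best is None or acc > best[1]:
            best = (t, acc, gap)
    return best


# Invented toy data, for illustration only.
APPLICANTS = [
    Applicant("A", 0.9, True), Applicant("A", 0.8, True), Applicant("A", 0.7, True),
    Applicant("A", 0.5, False), Applicant("A", 0.3, False), Applicant("A", 0.2, False),
    Applicant("B", 0.8, True), Applicant("B", 0.5, True),
    Applicant("B", 0.45, False), Applicant("B", 0.2, False),
]
THRESHOLDS = [0.4, 0.65, 0.85]

if __name__ == "__main__":
    print("accuracy only:   ", pick_threshold(APPLICANTS, THRESHOLDS))
    print("with fairness cap:", pick_threshold(APPLICANTS, THRESHOLDS, max_gap=0.1))
```

On this toy data, optimizing for accuracy alone picks the 0.65 threshold: 90 percent accuracy, but half of the creditworthy applicants in group B are rejected and none in group A. Capping the gap at 0.1 picks the 0.4 threshold instead: accuracy falls to 80 percent and the false rejection rates are equal. That drop is the kind of tradeoff Kearns describes.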

Continue reading at the Pennsylvania Gazette.