Crowdsourcing Gun Violence Research

Gun violence is often described as an epidemic, but as visible and shocking as shooting incidents are, epidemiologists who study that particular source of mortality have a hard time tracking them. Since 1996, federal law has barred the Centers for Disease Control and Prevention from using its injury-prevention funds to advocate or promote gun control, a restriction that has effectively halted the agency's gun violence research. As a result, there is little in the way of centralized infrastructure to monitor where, how, when, why and to whom shootings occur.

Chris Callison-Burch, Aravind K. Joshi Term Assistant Professor in Computer and Information Science, and graduate student Ellie Pavlick are working on a way to solve this problem.

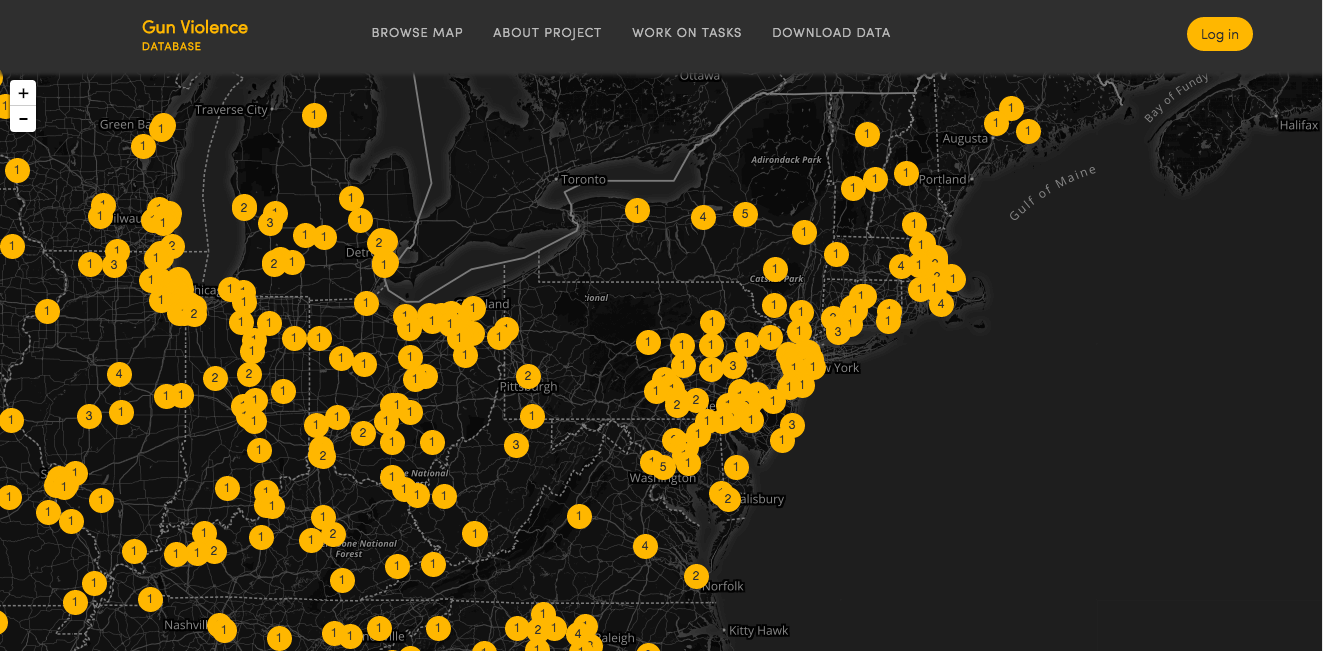

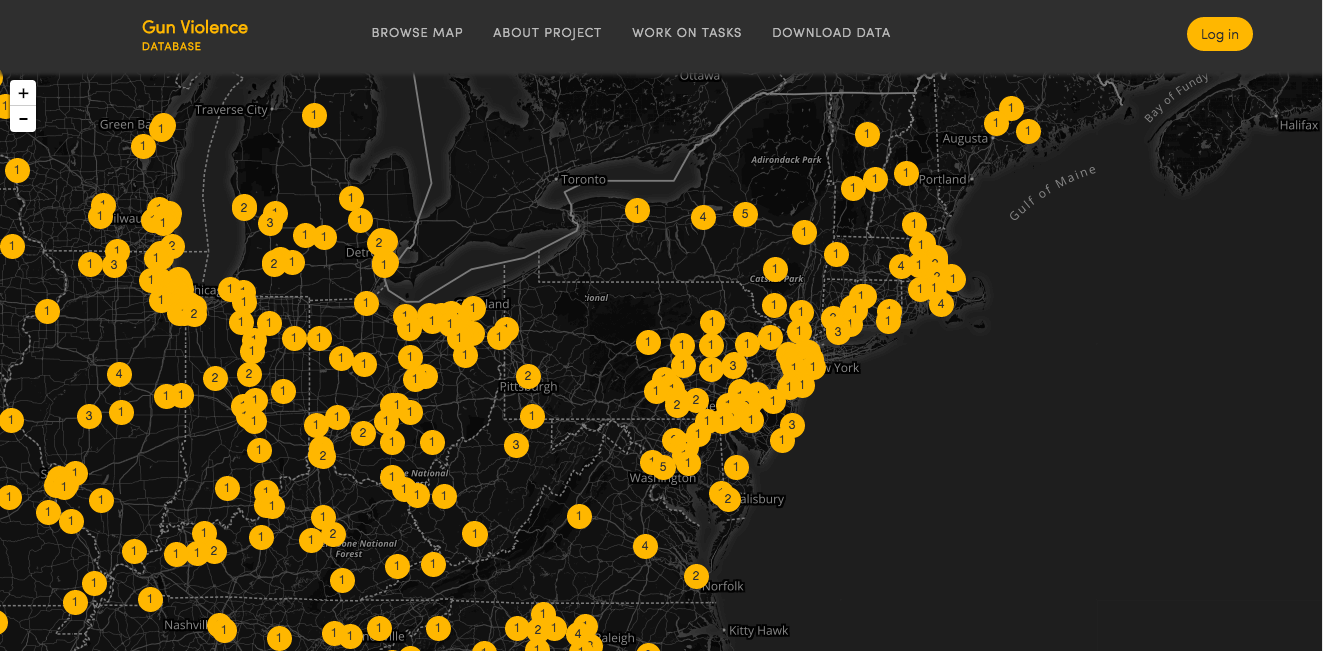

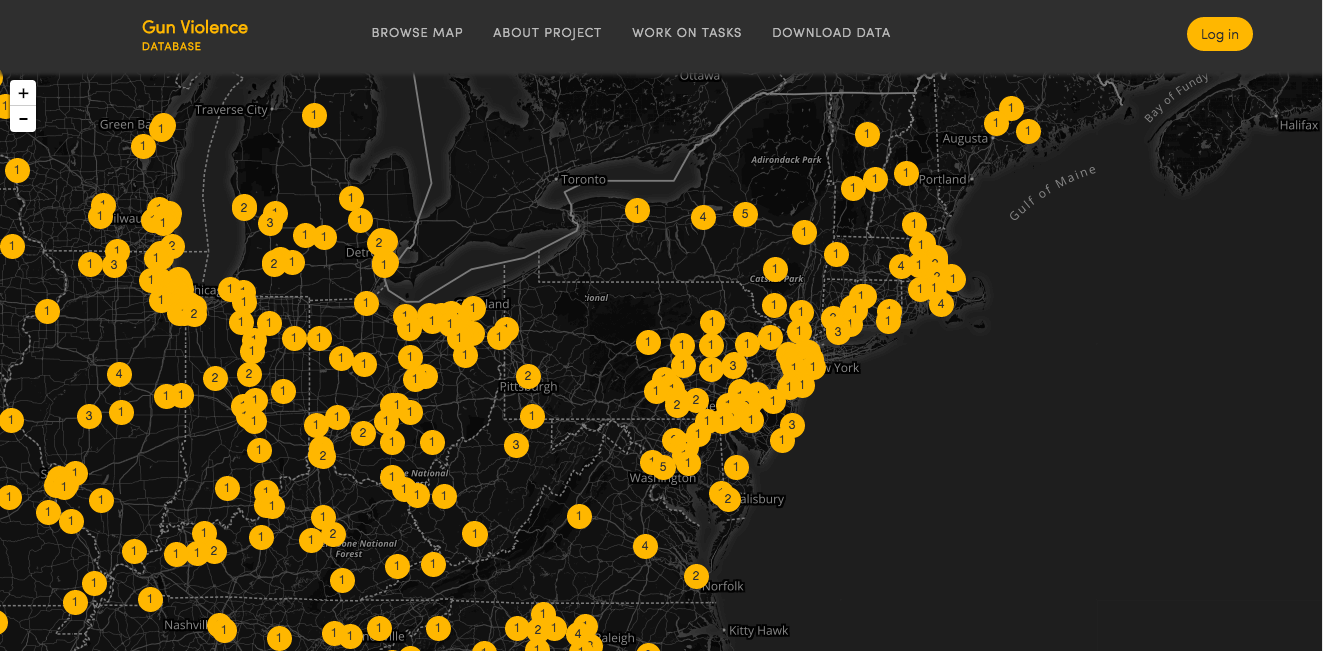

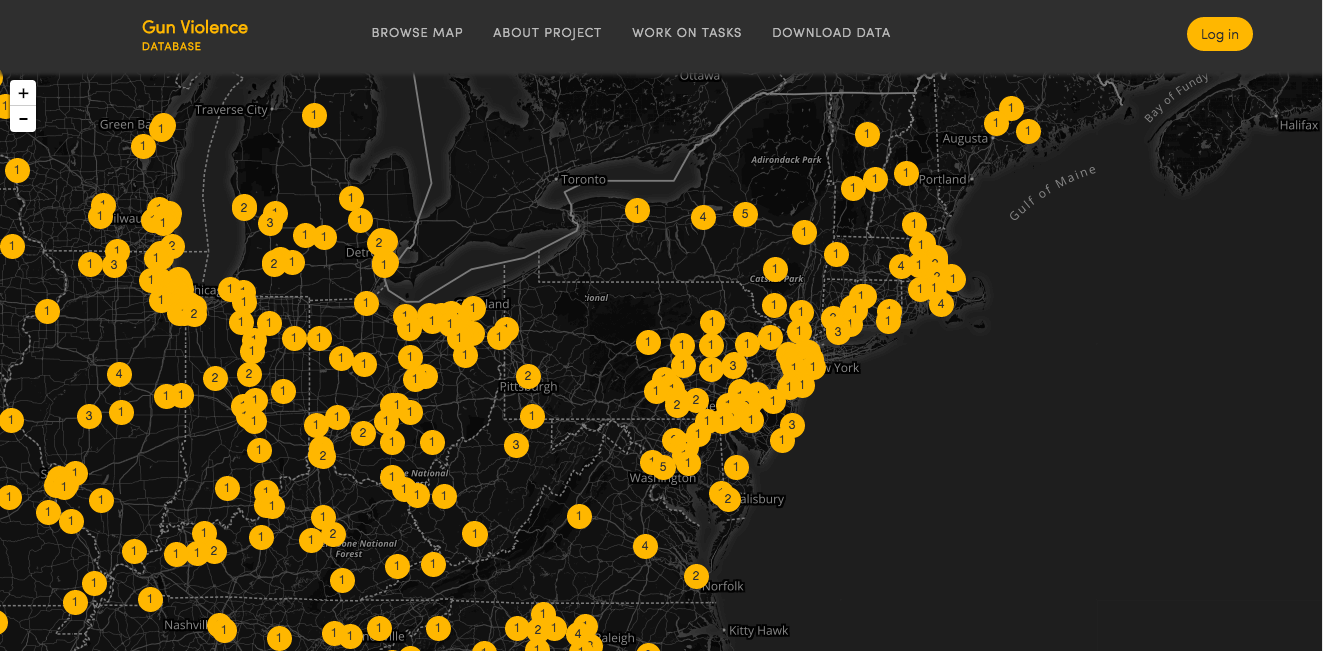

They have developed the Gun Violence Database, which combines machine learning and crowdsourcing techniques to produce a national registry of shooting incidents. Callison-Burch and Pavlick’s algorithm scans thousands of articles from local newspapers and television stations and determines which are about gun violence. Everyday people then pull vital statistics out of those articles, and that information is compiled into a unified, open database.

For natural language processing experts like Callison-Burch and Pavlick, the most exciting prospect of this effort is that it is training computer systems to do this kind of analysis automatically. They recently presented their work on that front at Bloomberg’s Data for Good Exchange conference.

The Gun Violence Database project started in 2014, when it became the centerpiece of Callison-Burch’s “Crowdsourcing and Human Computation” class. There, Pavlick developed a series of homework assignments that challenged undergraduates to develop a classifier that could tell whether a given news article was about a shooting incident.

“It allowed us to teach the things we want students to learn about data science and natural language processing, while giving them the motivation to do a project that could contribute to the greater good,” says Callison-Burch.
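The article does not say which kind of classifier the students built. As an illustration only, the sketch below uses multinomial naive Bayes with add-one smoothing, a common first text classifier. The six training sentences and the labels `"gun"` and `"other"` are invented for this example and are not drawn from “The Gun Report.” Note that the photography and drinking-contest sentences mention “shots” but are labelled as non-shooting text.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)  # number of documents per class
        self.word_counts = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict(self, doc):
        total_docs = sum(self.doc_counts.values())
        best_class, best_score = None, float("-inf")
        for c in self.classes:
            score = math.log(self.doc_counts[c] / total_docs)  # log prior
            denom = sum(self.word_counts[c].values()) + len(self.vocab)
            for tok in tokenize(doc):
                if tok in self.vocab:  # skip words never seen in training
                    score += math.log((self.word_counts[c][tok] + 1) / denom)
            if score > best_score:
                best_class, best_score = c, score
        return best_class


# Invented toy training data (hypothetical, for illustration only).
TRAIN_DOCS = [
    "A man was shot and killed outside a bar on Friday night, police said.",
    "Police say the victim was wounded by gunfire during a robbery.",
    "Two teens were injured in a drive-by shooting on Elm Street.",
    "The photography exhibit features shots taken across the city.",
    "The city council voted to approve the new school budget.",
    "Contestants downed tequila shots at the annual bar contest.",
]
TRAIN_LABELS = ["gun", "gun", "gun", "other", "other", "other"]

clf = NaiveBayesClassifier().fit(TRAIN_DOCS, TRAIN_LABELS)
print(clf.predict("Police said a woman was shot during a robbery"))
print(clf.predict("Tequila shots at the photography contest"))
```

A real classifier would need far more labelled articles; with six sentences the word statistics are only a toy.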

The articles students used to train their classifiers were sourced from “The Gun Report,” a daily blog from New York Times reporters that attempted to catalog shootings from around the country in the wake of the Sandy Hook massacre. Realizing that their algorithmic approach could be scaled up to automate what the Times’ reporters were attempting, the researchers began exploring how such a database could work. They consulted with Douglas Wiebe, an Associate Professor of Epidemiology in Biostatistics and Epidemiology in the Perelman School of Medicine, to learn more about what kind of information public health researchers needed to better study gun violence on a societal scale.

From there, the researchers enlisted people to annotate the articles their classifier found, connecting with them through Mechanical Turk, Amazon’s crowdsourcing platform, and their own website, http://gun-violence.org/.

The database still needs a human touch to catch linguistic idiosyncrasies that computers can’t yet make sense of. An article about a photography exhibit or a drinking contest might make liberal use of the word “shot,” fooling the algorithm into thinking the story describes a crime. Humans must also still determine when different news stories are about the same incident, so that the same shooting isn’t counted multiple times, and must manually fill in the data that epidemiologists need.
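In the project, people decide which stories describe the same shooting. One way software could help is by proposing candidate duplicates for them to confirm. The sketch below is a hypothetical heuristic, not the project’s method. It assumes each article already carries a city and a publication date (invented fields), treats two articles as a candidate match when they share a city, were published within a day of each other, and have a word-overlap (Jaccard) similarity of at least 0.3 (an arbitrary threshold), and merges matches transitively with a union-find structure.

```python
from datetime import date


def jaccard(text_a, text_b):
    """Jaccard similarity of the two texts' lowercase word sets."""
    a, b = set(text_a.lower().split()), set(text_b.lower().split())
    return len(a & b) / len(a | b) if (a | b) else 0.0


def likely_same_incident(x, y, threshold=0.3):
    """Hypothetical rule: same city, published within one day, similar wording."""
    return (
        x["city"] == y["city"]
        and abs((x["date"] - y["date"]).days) <= 1
        and jaccard(x["text"], y["text"]) >= threshold
    )


def group_incidents(articles, threshold=0.3):
    """Cluster article indices into candidate incidents using union-find."""
    parent = list(range(len(articles)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(articles)):
        for j in range(i + 1, len(articles)):
            if likely_same_incident(articles[i], articles[j], threshold):
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(articles)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


articles = [
    {"city": "Philadelphia", "date": date(2015, 3, 1),
     "text": "man shot on market street early sunday"},
    {"city": "Philadelphia", "date": date(2015, 3, 2),
     "text": "police say man shot on market street sunday morning"},
    {"city": "Chicago", "date": date(2015, 3, 1),
     "text": "man shot on market street early sunday"},
]
print(group_incidents(articles))  # [[0, 1], [2]]
```

Any grouping like this would still go to a person for confirmation, and a word-overlap rule will miss stories that describe the same shooting in very different words.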

Where possible, annotators enter the name, age, gender, and race of both the shooters and victims, and answer other questions related to the circumstances of the incident, such as the number of shots fired, whether the shooting was part of another crime, or whether the gun was stolen.
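The fields annotators fill in map naturally onto a structured record. The dataclasses below are a hypothetical schema built only from the fields listed above; the class and field names, and the use of `None` for details an article does not report, are assumptions rather than the Gun Violence Database’s actual format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class Participant:
    """A shooter or victim; None means the article did not say."""
    name: Optional[str] = None
    age: Optional[int] = None
    gender: Optional[str] = None
    race: Optional[str] = None


@dataclass
class IncidentRecord:
    """One annotated shooting incident (hypothetical schema)."""
    article_url: str
    shooters: List[Participant] = field(default_factory=list)
    victims: List[Participant] = field(default_factory=list)
    shots_fired: Optional[int] = None
    part_of_other_crime: Optional[bool] = None
    gun_stolen: Optional[bool] = None


record = IncidentRecord(
    article_url="https://example.com/news/123",  # placeholder URL
    victims=[Participant(age=24, gender="male")],
    shots_fired=3,
    part_of_other_crime=True,
)
print(json.dumps(asdict(record), indent=2))
```

Leaving unknown values as `None` rather than guessing keeps “not reported” distinct from “no,” which matters when epidemiologists later count, say, how often guns were stolen.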

This “information extraction” phase is the closest to being automated.

“This is like what Google tries to do with some queries,” Callison-Burch says. “If you ask how tall a celebrity is, or where he or she was born, it will try to give you the answer directly. These kinds of questions are easier than what we’re asking, because information on celebrities can usually be found on Wikipedia in a structured way, in an info box at the top of the article.

“When our Mechanical Turk workers and volunteers do information extraction tasks, we’re essentially generating that kind of info box for the article. Then we can learn the mapping from that structured info into the unstructured information in the article itself.”
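One simple way to connect an info box to its article, similar in spirit to distant supervision and offered here only as an illustration rather than as the researchers’ method, is to find where each filled-in value appears in the text. Those matched spans could then serve as labelled training examples for an extractor. The function name `align_infobox`, the field names, and the sample article are all hypothetical.

```python
import re


def align_infobox(article, infobox):
    """Return (field, value, start, end) for each info-box value found in the article.

    Matching is exact (case-insensitive, whole words only); values that are
    missing (None) or phrased differently in the text are skipped.
    """
    spans = []
    for field_name, value in infobox.items():
        if value is None:
            continue
        pattern = r"\b" + re.escape(str(value)) + r"\b"
        match = re.search(pattern, article, flags=re.IGNORECASE)
        if match:
            spans.append((field_name, str(value), match.start(), match.end()))
    return spans


article = ("John Smith, 24, was shot three times outside a store "
           "in Camden on Friday.")
infobox = {"victim_name": "John Smith", "victim_age": 24,
           "city": "Camden", "shots_fired": 3, "gun_stolen": None}

for span in align_infobox(article, infobox):
    print(span)
```

Here `shots_fired` goes unmatched because the article writes “three” rather than “3,” a small example of why free-text news is harder to align than a Wikipedia info box.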

Within the next few months, Callison-Burch and Pavlick plan to present an academic paper describing their progress on improving the information extraction phase.

In the meantime, they are still looking for volunteers to help build the database and train their algorithms.