Combatting ‘Fairness Gerrymandering’ with Socially Conscious Algorithms

Decision-making algorithms help determine who gets into college, who is approved for a mortgage, and who is most likely to commit another crime after being released from jail. These algorithms are produced by programs that ingest massive databases and are instructed to find the factors that best predict the desired outcome.

Both the people who write these algorithms and the people who use them understand that the decisions they produce are not always fair. Bias based on race, gender, religion, sexual orientation, or almost any other subgroup status can be present in the data, in the way the computer draws relationships between data points, or in both. This leads to bad predictions, in the form of both false positives and false negatives, that cluster disproportionately in some subsets of the population in question.

Penn’s Warren Center for Network and Data Sciences is working on this problem.

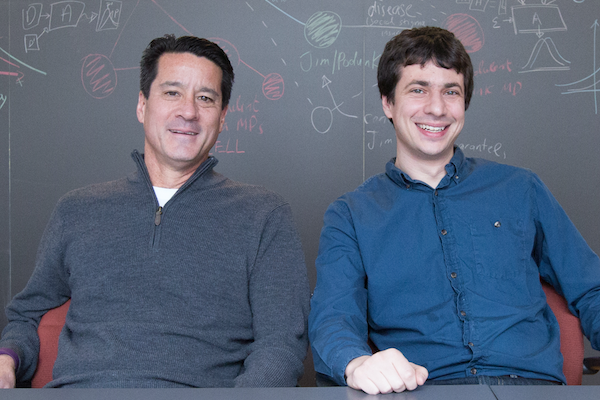

Michael Kearns, founding director of the Warren Center and National Center Professor of Management & Technology in Penn Engineering’s Department of Computer and Information Science (CIS), and fellow Warren Center member Aaron Roth, Class of 1940 Bicentennial Term Associate Professor in CIS, are interested in imbuing these decision-making algorithms with social norms, including fairness. They’re interested in one particularly vexing issue: Algorithms that take fairness into account can have the paradoxical effect of making their outcomes particularly unfair to one subgroup.

This is known as “fairness gerrymandering.”

Like a political party that draws districts such that a critical majority of their opponents’ voters are concentrated in one spot, an algorithm can meet fairness constraints by unintentionally “hiding” bias at the intersection of the multiple groups it’s asked to be fair to.

A malicious person could achieve similar results on purpose. A racist country club owner, for example, could comply with fairness-in-advertising regulations by only showing ads to minorities who live far away and who could not afford the dues. Taken at face value, the owner has complied with the letter of the law, but still produced results that are unfair.

Algorithms are susceptible to producing the same sort of biased outcome due to the intrinsic trade-off that predictive algorithms have to make between fairness and accuracy.

Imagine an algorithmic classifier that connected students’ SAT scores and high school GPAs to their college graduation rates. Colleges might think such a classifier would be the most fair and objective way to predict which new applicants will do best and admit them accordingly. However, a “race-blind” algorithm — one tasked to maximize accuracy across the entire population of students — might inadvertently return results that are unfair to minorities.

Black students, through the institutionalized racism of poorer schools and less access to private tutoring, might tend to have lower GPA and SAT scores than their white counterparts, though the relationship between those scores and their college performance is just as strong, if not stronger. In that scenario, the algorithm would incorrectly reject black students at a higher rate simply because they are a minority: they have fewer data points and thus a smaller effect on the classifier.
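A toy sketch can make this mechanism concrete. Every number below is invented for illustration: a majority group of 900 applicants who go on to graduate when their score is 60 or higher, a minority group of 100 applicants who graduate at 40 or higher, and a single group-blind cutoff chosen only to minimize total mistakes. None of this is drawn from the Warren Center's work, and `make_group`, `errors` and `false_negative_rate` are hypothetical helper names.

```python
# Assumption: scores run 0..99 and each group has its own (hidden) score
# above which its students actually graduate. All figures are made up.
def make_group(copies, success_cutoff):
    # One record per applicant: (score, would_graduate)
    return [(s, s >= success_cutoff) for s in range(100) for _ in range(copies)]

majority = make_group(copies=9, success_cutoff=60)  # 900 applicants
minority = make_group(copies=1, success_cutoff=40)  # 100 applicants

def errors(group, threshold):
    # Count applicants whose admit/reject decision disagrees with the outcome.
    return sum((score >= threshold) != graduates for score, graduates in group)

def false_negative_rate(group, threshold):
    # Share of would-be graduates who are rejected by the cutoff.
    qualified = [score for score, graduates in group if graduates]
    rejected = sum(score < threshold for score in qualified)
    return rejected / len(qualified)

# A "group-blind" rule: pick the single cutoff with the fewest total errors.
best_threshold = min(
    range(101),
    key=lambda t: errors(majority, t) + errors(minority, t),
)

majority_fnr = false_negative_rate(majority, best_threshold)
minority_fnr = false_negative_rate(minority, best_threshold)

print("chosen cutoff:", best_threshold)
print("majority false-negative rate:", majority_fnr)
print("minority false-negative rate:", round(minority_fnr, 3))
```

In this made-up setting the error-minimizing cutoff lands exactly on the majority's value of 60. No qualified majority applicants are turned away, while a third of the qualified minority applicants are, purely because the larger group dominates the error count.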

One approach existing algorithms use to avoid this type of unfairness is to stipulate that the false-negative rates for each subgroup be equal. But this problem gets trickier as the number of subgroups an algorithm is tasked with considering increases. This is where fairness gerrymandering comes into play.

“We might ask an algorithm to make this assurance of fairness to populations based on, say, race, gender and income, all at the same time,” Roth says. “And the algorithms will make false-negative rates equal on race, the false-negative rates equal on gender, and the false-negative rates equal on income. The problem is, when we look at the false-negative rate for poor, black women, it’s extremely unfair. The algorithm has essentially cheated.”

Critically, algorithms that do this type of fairness gerrymandering aren’t designed to do this on purpose; they just haven’t been explicitly told not to. Since they can meet the harder constraints by spreading out unfairness over the three groups they have been explicitly asked to protect, they do not need to consider that they have concentrated all that unfairness in the place where those three groups intersect.
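The effect is easy to reproduce with a few made-up numbers. The sketch below is only an illustration, not the researchers' method: it assumes two protected attributes instead of three, uses hypothetical labels ("A"/"B" for race, "X"/"Y" for gender), and invents counts of qualified applicants and wrongful rejections for each combination.

```python
from collections import defaultdict

# Hypothetical data, qualified applicants only.
# (race, gender) -> (qualified applicants, qualified applicants wrongly rejected)
cells = {
    ("A", "X"): (100, 40),
    ("A", "Y"): (100, 0),
    ("B", "X"): (100, 0),
    ("B", "Y"): (100, 40),
}

def false_negative_rate(keys):
    # Pool the selected cells and divide wrongful rejections by qualified applicants.
    qualified = sum(cells[k][0] for k in keys)
    rejected = sum(cells[k][1] for k in keys)
    return rejected / qualified

def marginal_rates(index):
    # Group cells by one attribute only (index 0 = race, index 1 = gender).
    groups = defaultdict(list)
    for key in cells:
        groups[key[index]].append(key)
    return {value: false_negative_rate(keys) for value, keys in groups.items()}

race_rates = marginal_rates(0)
gender_rates = marginal_rates(1)
intersection_rates = {key: false_negative_rate([key]) for key in cells}

print("by race:        ", race_rates)
print("by gender:      ", gender_rates)
print("by intersection:", intersection_rates)
```

Checked one attribute at a time, every group sees the same 20 percent false-negative rate, so the constraint looks satisfied. Checked at the intersections, two of the four combinations absorb all of the wrongful rejections at 40 percent while the other two see none.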

Kearns, Roth and other Warren Center researchers, including doctoral student Seth Neel and former doctoral student Steven Wu, are currently writing algorithms that explicitly counteract fairness gerrymandering. Preliminary work on the subject is already on arXiv.org for other machine learning researchers to begin validating.

“We demonstrate theorems that prove that this algorithm provides protections against fairness gerrymandering,” Kearns says. “We also demonstrate experiments that show our definition of fairness in action. With every constraint you add you lose some accuracy, but the results remain useful. There’s a cost and a tradeoff, but there are sweet spots on real data sets.”

The key to the Warren Center’s efforts in this field is to synthesize knowledge from the relevant domains in law, philosophy, economics, sociology, and more, and to match it with the way computers “think” and behave.

“Traditional approaches to fairness, like regulations and watchdog groups, are important, but we’re trying to prevent discrimination directly in the algorithms,” Kearns says. “We’re not saying this is going to solve everything, but it’s an important step to get human social norms embedded in the code and not just monitor behavior and object later. We want the algorithms to be better behaved.”