The Center for Innovation and Precision Dentistry (CiPD), a collaboration between Penn Engineering and Penn Dental Medicine, has partnered with Wharton’s Mack Institute for … Read More ›

In 2019, Michael Kearns, National Center Professor of Management & Technology in Computer and Information Science (CIS), and Aaron Roth, Henry Salvatori Professor in Computer … Read More ›

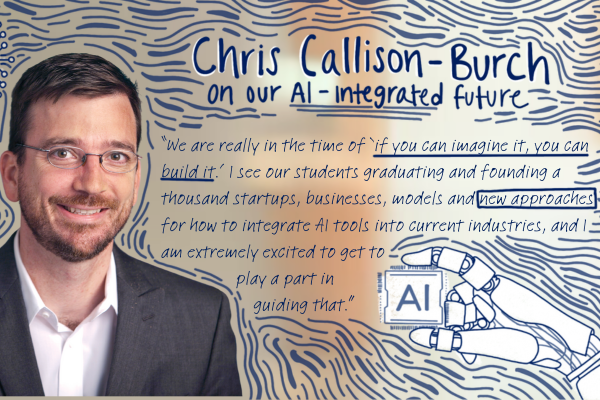

Through programs like the Raj and Neera Singh Program in Artificial Intelligence, the first Ivy League undergraduate degree of its kind, Penn Engineering is addressing … Read More ›

The technological landscape is changing at a rapid pace, faster than ever before, leaving almost no career path untouched. In addition, professionals looking to augment … Read More ›

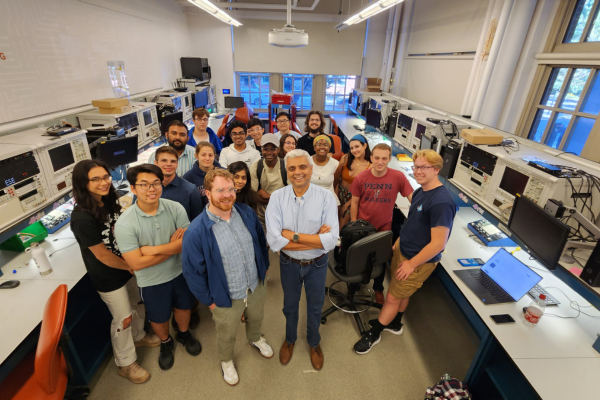

Mark G. Allen has been named Chair of the Department of Electrical and Systems Engineering. Allen is the Alfred Fitler Moore Professor in Electrical and … Read More ›

When engaging in virtual reality (VR) and manipulating an object, users want to be able to feel what they’re touching in virtual space. A hard … Read More ›

With a generous gift to Penn Engineering, longtime Penn professors Daniel E. Koditschek and his wife, Anne M. Teitelman, chose to turn a dark time … Read More ›

![Rewriting the Script: Developing Effective AI Assistants](https://blog.seas.upenn.edu/wp-content/uploads/2023/09/Alyssa-photo-hires-scaled-e1695322040840-780x520.jpg)

Alyssa Hwang is a Ph.D. student in the Department of Computer and Information Science (CIS) working in the Natural Language Processing group (Penn NLP) and the Human Computer Interaction group (Penn HCI), where she develops AI assistants that effectively deliver complex information. She is advised by Chris Callison-Burch, Associate Professor in CIS, and Andrew Head, Assistant Professor in CIS.

"This is so hard," Caroline\* griped as she bustled around her kitchen. I scribbled furiously on my clipboard from my seat in an unobtrusive corner. As part of my research, I was observing Caroline cook sesame pork Milanese with an Amazon Alexa Echo Dot, and she was struggling to find the right equipment and ingredients.

"How many eggs for this recipe?" she asked into the air. Alexa did not respond. This had happened a few times already.

"Alexa," Caroline tried again. "How many eggs for this recipe?"

"The recipe calls for two large eggs, total," Alexa finally replied.

If you've ever tried asking Alexa or Google Home a question, then you have probably noticed that modern voice assistants tend to find written sources from the web and read them aloud with few modifications, like a script. If you've ever felt impatient listening to the assistant read a few sentences from a website, imagine listening to an entire recipe. Caroline was struggling to do exactly that as she cooked her dish. Right before this moment, Alexa had told her:

"Whisk the eggs, half teaspoon sesame oil, and a pinch each of salt and pepper in a second dish. If you want me to repeat this step, or list the ingredients for the step, just let me know."

This step comes from a real Food Network recipe. It may seem relatively straightforward on paper, but Caroline ended up asking for it to be repeated twice. She struggled to figure out how many eggs she needed and even grumbled out loud about the difficulty. What went wrong?

I was on a mission to find out. Caroline was one of twelve research participants helping me learn how voice assistants can effectively guide people through complex tasks, like cooking. The participants represented a range of cooking skill levels and prior experience using voice assistants. One participant, Hugo, had never even cooked a meal on his own before. Others, like Bianca, moved through the kitchen with practiced ease, whipping up complicated dishes like eggless red velvet cake and egg biryani.

All participants in the study faced a variety of challenges during their sessions, which took place in their own homes. One subtle but substantial challenge was an overall lack of awareness throughout Alexa's guidance. Unlike reading a written recipe, listening to a recipe being read aloud takes away your control over the pace of information. You completely rely on the voice assistant to give you all of the information you need, but not too much, at the right time. That's a tall order.

In Caroline's case, the biggest problem was that Alexa was trying to have her do too much at once. She had to gather the ingredients, measure the correct amounts, and combine them in a "second dish," which she also needed to retrieve. On top of that, Alexa didn't tell her how many eggs she needed and didn't respond the first time she tried to ask. Since Caroline was working with an audio-only device without a copy of the original recipe, she couldn't scan the recipe at her own pace or jump back to the ingredients list to check for the number of eggs. Caroline liked her sesame pork Milanese in the end, but she was disappointed by her cooking experience.

Hugo struggled immensely to search for information in a sausage and veggie quiche recipe. He already knew when he started that he would need to preheat the oven, but he wasn't sure about the correct setting. At one point, Hugo asked, "Alexa, should I preheat the oven?" and Alexa responded with incorrect information from an external website: "From linguazza.com, start by heating the oven to 400 degrees." He eventually gave up and made a "wild guess." (It was wrong.) It turned out that the information he needed was buried all the way in step 6, but he had no way of knowing ahead of time.

Hugo wasn't the only one who seemed lost while cooking with Alexa. Adrian, who cooked steaks with blue cheese butter, felt a stark difference between reading a recipe and listening to one. "Recipes are generally written pretty linearly," he explained, "so you're either going to go up or down, forwards or backwards. There's no map when you're doing it all by audio." Alexa's abilities were also unclear, leading another participant to conclude that "you cannot expect it to answer any questions you ask. You need to think, 'Okay, I have this problem, and in what way can it assist me.'"

Fortunately, we already have a way forward. In my lab's paper recently published at the ACM Conference on Designing Interactive Systems, a premier venue focused on interactive systems design and practice, we propose ways for voice assistants to transform written sources for better spoken communication: in other words, "rewrite the script." First, voice assistants can start by directly and proactively telling users about the features they provide and how they can use them. They can also reorder time-sensitive steps so they are heard earlier during the process. Finally, assistants can split complex steps into substeps, summarize long portions, elaborate on key details, provide visual information for additional clarity, and redistribute information that has been scattered across the page.

Many of these strategies are now possible given the recent advancements in generative AI models like ChatGPT. While past work relied on intricate rule-based methods, careful fine-tuning, or laborious human annotation efforts, new large language models (LLMs) handle complex tasks that we could barely imagine a few years ago with impressive sophistication. These new LLMs could take on many of the editing tasks, and more, in the backend to help us build more usable systems.

The new age of AI has redefined our relationships with computers, which is the central focus of my Ph.D. thesis. My work with Alexa is just the first part of my thesis. Next, I'm focusing on leveraging LLMs to revamp audiobooks, from describing images and non-textual content to navigating more seamlessly through spoken text. I am also a strong believer in inclusive design: including participants representing a wide range of vision loss, neurodiversity and many core communities is an important part of my future projects.

Voice assistants and LLMs have a bright future ahead of them. We just need to write the script.

\*All names have been changed to protect participant anonymity. Quotes have been lightly edited for brevity and clarity.

Alyssa Hwang is a Ph.D. student in the Department of Computer and Information Science (CIS) working in the Natural Language Processing group (Penn NLP) and … Read More ›

Large amounts of data are becoming more accessible every day in almost all industries. Electrical and system engineering skills such as developing nanoscale devices, designing … Read More ›

It may not always be apparent to the naked eye, but your yearly ophthalmology exams are at the forefront of two emerging trends in health … Read More ›