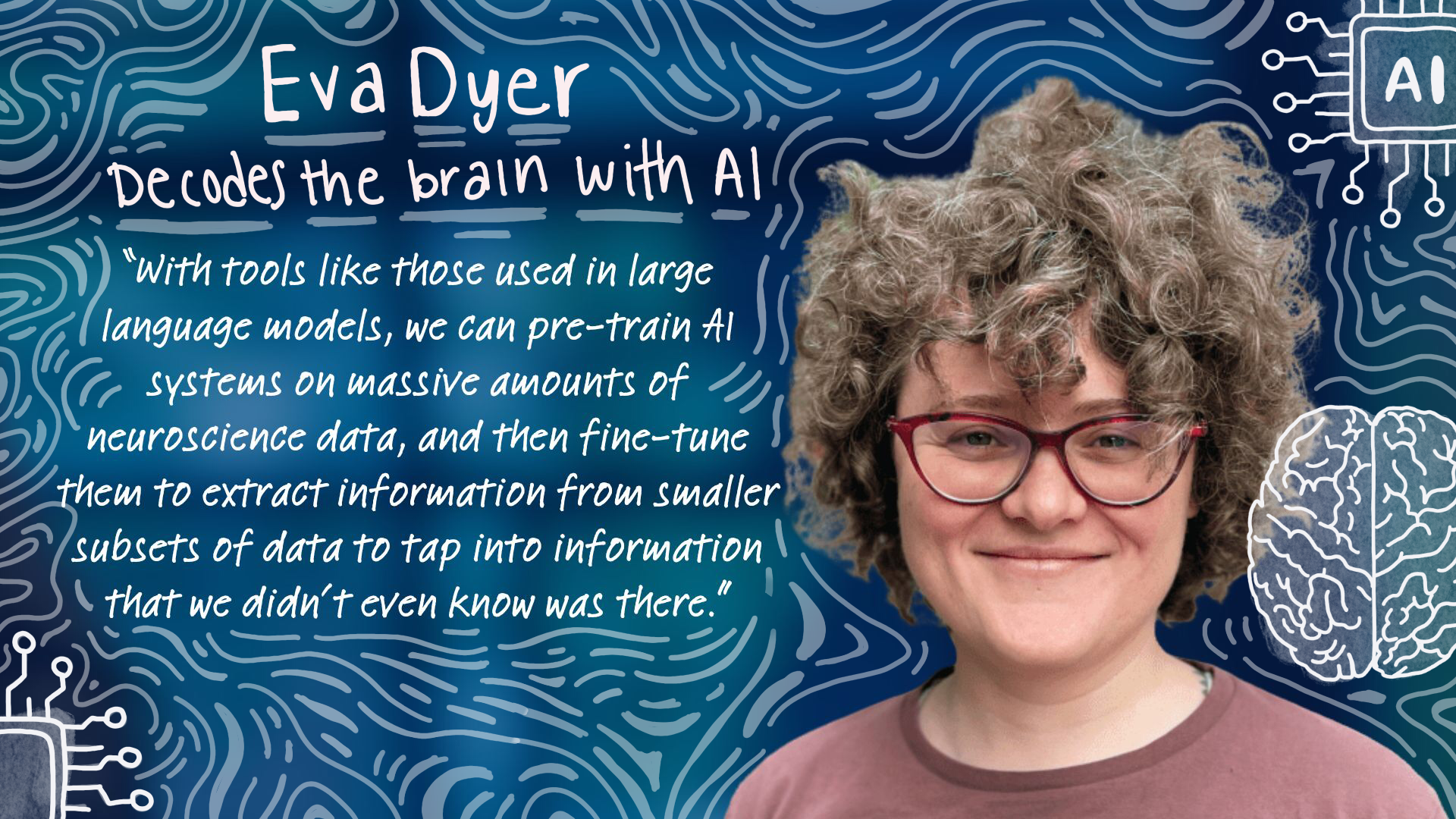

When Eva Dyer, Rachleff Associate Professor in Bioengineering and in Computer and Information Science, talks about the brain, she doesn’t just talk like a neuroscientist. She speaks with the rhythm of someone who listens deeply. Once a jazz singer and multi-instrumentalist, Dyer now orchestrates another kind of harmony: finding the hidden signals in our brains, with the help of artificial intelligence.

“As a kid, I didn’t have a lot of exposure to what a scientist was or what they looked like,” says Dyer. “I was the first in my family to receive a higher education. But, I didn’t set out to be a scientist; I just followed the questions I was curious about.”

One of those early questions: How do we interpret music?

In high school, Dyer was immersed in music. She sang jazz, played piano and drums, and experimented with many other instruments. At the same time, in her physics class, she was learning about sound and vibration.

“It blew my mind that physics and math could explain the things I loved most,” she says. “There was something magical about realizing that sound, emotion and science weren’t separate, they were deeply connected.”

That connection became her compass. As an undergraduate at the University of Miami, Dyer majored in Audio Engineering, a multidisciplinary program through which she earned a dual degree from the School of Engineering and the School of Music. With that degree, she thought she might become an acoustic consultant, helping design speakers and architecture to elevate sound and music. But a single concept, auditory perception (how our brain hears and interprets sounds in the world around us), shifted everything.

“It made me realize the brain is a key part of how we experience sound,” she says. “That launched me into neuroscience.”

From there, Dyer followed her instincts and the data. She leaned into machine learning and signal processing, developing the computational tools to analyze how the brain responds to complex environments. This was before AI and neuroscience had begun to come together as fields, but she could already see the interdisciplinary potential.

“When I started grad school, I knew I wanted to use machine learning to understand the brain, but at that time the fields of data science and neuroscience hadn’t really come together yet,” she reflects. “Building a foundation in applied math and signal processing was an important stepping stone for me. By the time I finished my Ph.D. in 2014, new high-resolution methods were producing massive data sets, and it was an exciting moment to bring deep learning into neuroscience, which is exactly what I focused on in my postdoc. Fast forward eight years, and now we have a thriving field that combines AI and neuroscience, while also asking how studying the brain can inform AI itself. It’s amazing to see how far things have come.”

Today, Dyer’s lab at Penn sits at the intersection of neuroscience and AI. She works closely with collaborators in Penn Medicine and across disciplines to decode the brain’s signals, including everything from intention and movement to mental health symptoms, using powerful machine learning techniques.

“With brain-computer interfaces, we’re trying to listen in on the brain to translate thought into action. This could result in helping someone living with paralysis regain control of movement or speech,” Dyer explains. “But to do that well, we are finding that we need a larger scale of data. With tools like those used in large language models, we can pre-train AI systems on massive amounts of neuroscience data, and then fine-tune them to extract information from smaller subsets of data to tap into information that we didn’t even know was there.”

In other words, by scaling up and training on more diverse data sets, drawn from different animals, experimental setups and mental states, Dyer’s lab found that its models could glean information from new brains more rapidly and effectively, with very little new training data to guide them.

One of her latest projects is especially ambitious: mapping the brain’s diversity at the level of individual cell types. The project is supported by the Hypothesis Fund, a catalytic seed grant funding body that supports bold, early-stage scientific research aimed at tackling systemic risks to human and planetary health. The idea is to use AI to generate algorithmic “descriptions” for each cell, a type of code that captures what each one does in a system so complex we don’t yet have words for it.

“You can’t really describe a neuron’s function with natural language alone, especially with all of its complex functions in the brain,” Dyer says. “But maybe we can describe it with code. Our hope is that large language models trained for programming can help us write algorithms that describe the behavior of different neurons in the brain. We’re building a new language to talk about the brain with the help of this seed fund.”

That language, she hopes, could help researchers understand which cells are affected in diseases like Alzheimer’s or design more precise interventions for disorders like OCD, where new tools might one day block unwanted neural patterns in real time.

But for Dyer, none of this is about reducing the brain or AI to a simple formula.

“AI and the brain are both black boxes in many ways,” she says. “It’s tempting to think we’ll crack them open and see exactly how they work, but the truth is a lot more complicated. What’s exciting is that as we’ve started to scale up, we’re finding signals that we never knew were there in the data. That means we need new ways to represent brain data sets and new ways to ask questions from these data.”

And the questions are growing more complex, not less.

“In science, it can feel attractive to control everything in an experiment,” Dyer notes. “But that often removes the richness of the real system, especially with the brain. I want to embrace this complexity, not avoid it.”

That spirit fits well at Penn Engineering, where Dyer is energized by the School’s deep commitment to interdisciplinary research, its proximity to Penn Medicine and the vibrant, walkable community she now calls home.

“Penn is a place that encourages interdisciplinarity, both in the culture and through the physical location of the schools I work with — we are all within a few blocks of each other,” says Dyer. “There’s also a real momentum around AI here, with the new major, new faculty and centers. Penn is investing in infrastructure, talent and space to do this right, and I’m excited to be a part of this community pushing the boundaries of the field.”

That includes fostering the next generation of thinkers and builders. This November, Dyer is organizing a buildathon aimed at researchers passionate about using AI to explore the brain. The event will bring together developers in AI and neuroscience to explore and contribute to torch brain, the new open-source software package from Dyer and her team for designing and scaling AI models. Their goal is to help build standards and benchmarks that will propel the field forward, and to create new tools for brain-machine interfaces, neural data analysis and beyond. Learn more and access tutorials here.

Potential collaborators, graduate students and mentees who would like to work with Dyer can learn more about her work by visiting her research website.