Through programs like the Raj and Neera Singh Program in Artificial Intelligence, the first Ivy League undergraduate degree of its kind, Penn Engineering is addressing the critical demand for specialized engineers and empowering students to lead in the responsible use of AI. We sat down with Chris Callison-Burch, Associate Professor in Computer and Information Science, who shared his vision of an AI-integrated future.

You have been working on language models for decades. How did your research perspective shift after the release of GPT-3, the predecessor of ChatGPT?

In the early 2000s, researchers, including me, thought that getting language models to produce human-quality language would be extremely difficult. In fact, most people in my field had shifted their attention away from the Turing Test toward other, more immediately quantifiable goals. When GPT-3 came out, I realized that AI-generated language had arrived much sooner than I anticipated, and I was startled by the quality of the language it could produce.

Honestly, the release of GPT-3 sparked an existential crisis in my career. I quickly realized that these AI language models were both revelatory and slightly terrifying. I had to think about how to position myself to help advance this technology, and I questioned whether academia was the right place to do it.

Why were you questioning academia and what convinced you to stay?

Given the amount of data and computing power needed to train large language models, or LLMs (think a Google-sized data center), I wasn’t sure academic environments could contribute much. I ended up staying after carefully thinking through the other contributions academic researchers can make to advance AI.

Academics can take open-source models such as Meta’s Llama and adapt them to their own use cases, much as open-source models have already been put to good use in healthcare provider systems and within government. However, a widely accessible and powerful LLM also carries serious risks, because harmful uses discovered after a model’s release are hard to limit. Without proper regulation and mandated updates, these tools could be used to spread misinformation, hate speech and dangerous advice. As researchers, it is our responsibility to mitigate those outcomes, and that alone is a very important role.

Why are policy and regulation around a tool like this so important?

Social media offers a lesson in how the government failed to regulate a revolutionary tool. AI is like that, only bigger. It’s an internet-level transformation that will reshape how we interact with information in incredibly interesting ways. It’s important not to be naive about our past failures in computer science and technology. Something as innocent as an online platform created to share photos with friends quickly turned into a place for misinformation and detachment from in-person relationships. We need to anticipate challenges, mitigate them and teach our students how to improve these tools.

What future challenges do you foresee, and how can we as humans work with AI instead of competing against it?

When I testified before Congress last summer, I warned that rapid AI advancements might give rise to mass unemployment. I understand that many people are experiencing the same sense of panic I felt when I first tried GPT-3. Artists and writers are worried that their work will be devalued. AI tools don’t threaten only the livelihoods of creative professionals; they can also write code, so even my own computer science degree, once in high demand, is being threatened.

My advice to anyone who is worried about AI taking their job is to experiment. Find out how you can incorporate AI into your work, and use it to help you identify the skills you have that a machine could never take away. This is a truly transformative technology that will shape many aspects of our lives, and I hope it will be for the better. I optimistically believe that AI will enable us all to become more productive workers and more creative in our artistic pursuits.

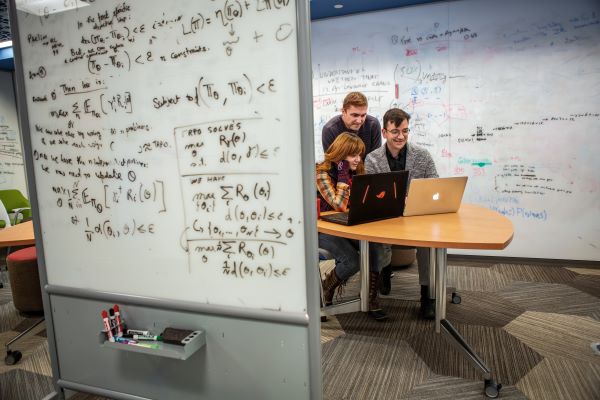

What kind of projects are your students currently involved in that keep your work relevant in the field?

The field is growing fast; it seems to be on an exponential growth curve, and current research is only the tip of the iceberg. My lab has just finished a study on how well AI detection software works. This type of software is already being used to detect AI-generated essays, and it could also help mitigate the spread of misinformation. We found that these detectors are good at identifying GPT-generated text that has been copied and pasted unchanged, but their accuracy quickly breaks down once the text is edited.

We are also working on a few tools that help software developers build their own systems on top of chat-based models. These include DataDreamer, which manages complex prompting workflows and synthetic data and can convert those workflows into smaller models, and Kani, a tool for designing Python programs with functions the AI can call, such as checking the weather or anything else you’d want the AI to do.

What about external collaborators?

We are working with the Allen Institute for Artificial Intelligence (AI2) on a project called “Holodeck,” which sits at the intersection of computer graphics and natural language processing. It’s a program that builds realistic 3D indoor rooms from text prompts. For example, you can tell it to arrange an apartment for a couple with a toddler, and Holodeck will design an apartment with items such as a high chair and a crib. AI2 can then train its robots in these generated 3D rooms, helping them learn faster in real environments. This project touches on many disciplines and helps to advance real-world tools.

How do you see Penn Engineers stepping into leadership roles in this new AI-integrated world?

Our students will likely be creating new AI tools that can be integrated into every industry. For example, engineers and physicians came together to create a smartphone app that records the conversation between a doctor and their patient and takes notes in real time, allowing the doctor to be more present with the patient. It not only transcribes the conversation but also suggests a course of action and pre-populates prescription information or needed follow-up appointments. I see our graduates developing new AI tools like this that free people from tedious administrative tasks, allowing them to reach their creative potential and get back to the parts of their work that have a real impact.

We are really in the era of “if you can imagine it, you can build it.” I see our students graduating and founding a thousand startups and businesses, and creating new models and new approaches for integrating AI tools into current industries. I am extremely excited to play a part in guiding that.