Scientists face many tradeoffs when they try to build and scale up brain-like systems that can perform machine learning. For instance, artificial neural networks can learn complex language and vision tasks, but training computers to perform these tasks is slow and requires a lot of power.

Training machines to learn digitally but perform tasks in analog (meaning the input varies with a physical quantity, such as voltage) can reduce time and power, but small errors can rapidly compound. An electrical network previously designed by physics and engineering researchers from the University of Pennsylvania is more scalable, because errors don’t compound in the same way as the system grows. But it is severely limited: it can only learn linear tasks, those with a simple relationship between the input and output.

Now, the researchers have created an analog system that is fast, low-power, scalable, and able to learn more complex tasks, including “exclusive or” (XOR) relationships and nonlinear regression. The system is called a contrastive local learning network: its components evolve on their own based on local rules, without knowledge of the larger structure. Physics professor Douglas J. Durian compares it to how neurons in the human brain don’t know what other neurons are doing, and yet learning emerges.
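
To see why XOR is a meaningful benchmark, it helps to check that no purely linear rule can reproduce it. The short Python sketch below is an illustration only, not the researchers' method or hardware: it tries every combination of two weights and a bias on a small, arbitrarily chosen grid (the names `linear_unit`, `count_linear_solutions`, and `GRID` are hypothetical) and counts how many reproduce the AND and XOR truth tables.

```python
from itertools import product

# Truth tables: inputs (x1, x2) -> target output.
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}


def linear_unit(w1, w2, b, x1, x2):
    """Thresholded linear function: outputs 1 when w1*x1 + w2*x2 + b > 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


def count_linear_solutions(table, grid):
    """Count (w1, w2, b) settings on the grid that reproduce the whole table."""
    return sum(
        all(linear_unit(w1, w2, b, x1, x2) == y for (x1, x2), y in table.items())
        for w1, w2, b in product(grid, repeat=3)
    )


# Assumed search grid for illustration: -2.0 to 2.0 in steps of 0.25.
GRID = [i / 4 for i in range(-8, 9)]

and_solutions = count_linear_solutions(AND, GRID)
xor_solutions = count_linear_solutions(XOR, GRID)

print("Linear rules that reproduce AND:", and_solutions)
print("Linear rules that reproduce XOR:", xor_solutions)
```

Some settings reproduce AND, but none reproduce XOR, and a finer grid would not help: for any linear function, the outputs at (0, 0) and (1, 1) sum to the same value as the outputs at (0, 1) and (1, 0), so the two pairs cannot sit on opposite sides of a single threshold. A system that learns XOR must therefore be doing something nonlinear.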

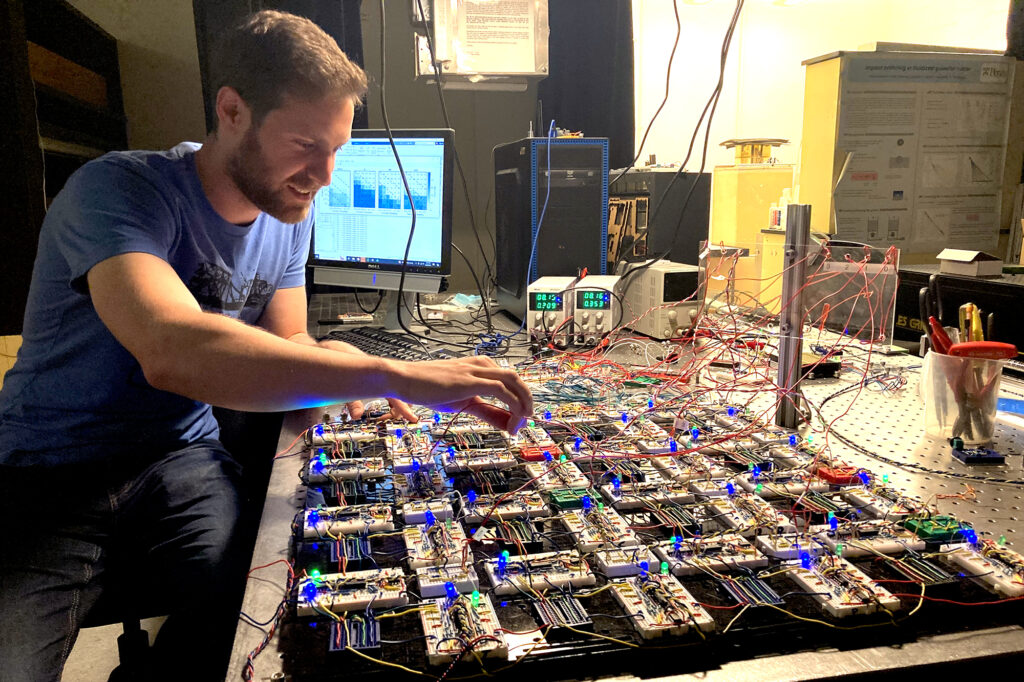

“It can learn, in a machine learning sense, to perform useful tasks, similar to a computational neural network, but it is a physical object,” says physicist Sam Dillavou, a postdoc in the Durian Research Group and first author on a paper about the system published in Proceedings of the National Academy of Sciences.

“One of the things we’re really excited about is that, because it has no knowledge of the structure of the network, it’s very tolerant to errors, it’s very robust to being made in different ways, and we think that opens up a lot of opportunities to scale these things up,” engineering professor Marc Z. Miskin says.

This story was written by Erica Moser. To read the full article, please visit Penn Today.