By now, the challenges posed by generative AI are no secret. Models like OpenAI’s ChatGPT, Anthropic’s Claude and Meta’s Llama have been known to “hallucinate,” inventing potentially misleading responses, and to divulge sensitive information, such as copyrighted materials.

One potential solution to some of these issues is “model disgorgement,” a set of techniques that force models to purge themselves of content that leads to copyright infringement or biased responses.

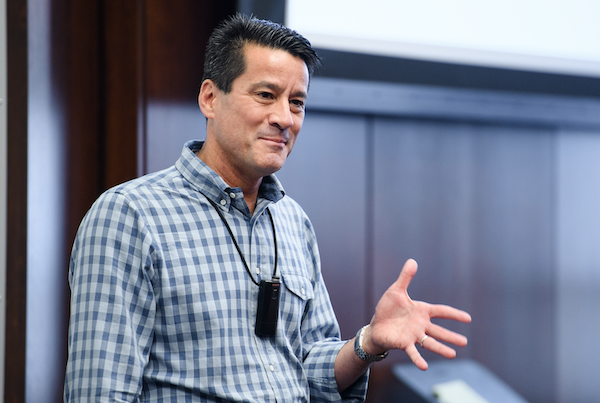

In a recent paper in Proceedings of the National Academy of Sciences (PNAS), Michael Kearns, National Center Professor of Management & Technology in Computer and Information Science (CIS), and three fellow researchers at Amazon share their perspective on the potential for model disgorgement to solve some of the issues facing AI models today.

Read a Q&A with Kearns about the paper on the Penn Engineering AI site.